QUANTUM MECHANICS MEETS DATA SCIENCE

Lyra Gao, Frontiers of Science Fall 2020

Join me as I explore the world of quantum computing—its foundations and frontiers.

““In God we trust. All others must bring data.””

Quantum computing was born in the 1980s (in part) as the brainchild of Paul Benioff, who was in turn born in Pasadena, California; beyond their Californian blood, quantum computers are now being researched and instantiated around the world. What are these objects, and why are they interesting?

Here is a very quick, general overview!

SUPERPOSITION AND QUBITS

You’re looking at the physics world’s prom queen. This image is the result given by the double-slit experiment, which was, by popular vote, determined to be the most beautiful experiment in physics [1]. It tells us that electrons are wavy.

This is one of the fundamental insights of quantum mechanics: That, at small scales (like electron-small), the positions of particles are strange entities called probability waves. The idea that ordinary, physical objects have an inherently probabilistic interpretation to them is counterintuitive, and it’s what the dead cat was about: Before we observe something, it simultaneously takes on several states. Before we open the box, the cat is both alive and dead. This puzzling phenomenon is called superposition. [2]

Another insight of quantum mechanics is entanglement, which is when quantum objects (read: small things) can become ‘related,’ such that observing the state of one will simultaneously determine the state of another [3]. Einstein called this “spooky action at a distance,” because the fact that it doesn’t require contact and is simultaneous is a really big deal [4]. Let’s ignore how big of a deal that is (if you can’t).

Quantum computing is about implementing these two insights into computers.

quantum mechanics and data science

A quantum binary digit, or a qubit, is the basic unit of quantum information. This is in contrast to a regular binary digit, or a bit, the basic unit of information for classical computers [5]. A bit is either 0 or 1; a stream of bits (like 1000101) represents information that can be interpreted in many ways—as an image, an audio file, as text, etc.—depending on, well, how we want to interpret it.

A qubit is different from a bit, since 1) a bit can only represent a 0 or 1, but a qubit can be a superposition of both, and 2) while ‘observing’ a bit doesn’t change what it is, ‘observing’ a qubit causes it to take on either 0 or 1 [6].

If such an object exists, it makes sense to talk about computers that use them. Why is this important? Because the classical world is deterministic: there is no randomness, and any random-resembling event is just a simulation given by a good pseudo-random number generator [7]. Qubits are truly random because they exist in a superposition until you measure them. Quantum computers would be able to simulate true randomness, and make true nondeterministic guesses.

Also, because of entanglement, measuring one qubit that's entangled with another will determine measurements on the other entangled qubit; because of this faster-than-light travel, computers which use qubits and quantum information will be able to gain a speed-up.

The bottom line: By using quantum principles, computers can use true randomness and maybe gain a speed-up. The speed-up is especially helpful for problems related to optimization, which can take thousands of years for classical computers to solve. This has tremendous applications for almost everything—including financial trading, drug development, and artificial intelligence.

some more on qubits (bc it’s cool)

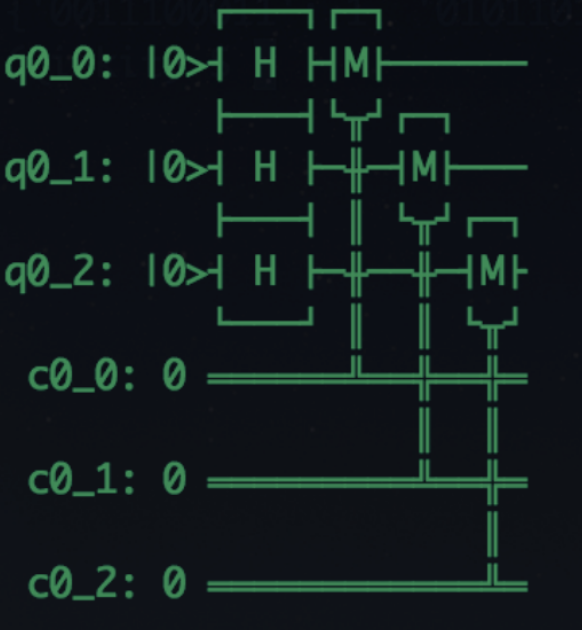

Qubits are represented in Dirac notation [8], where the probability of the qubit being a certain state is written as |ψ⟩ = [x1, …, xn]. The two basic qubits are the qubit which always becomes a 0 when observed, |0⟩ = [1,0], and the qubit which always becomes a 1 when observed, |1⟩ = [0,1]. To represent a superposition of 1 and 0, with a 50% probability of being either, we add |0⟩ and |1⟩ but normalize it so that total distance is 1: |ψ⟩ = 1/√2 |0⟩ + 1/√2 |1⟩ = [1/√2,1/√2]. To make meaning from this, we have to introduce the idea of quantum gates (analogous to gates in regular Boolean circuits) [9].

You can mess with these in Python by getting cloud access to IBM’s quantum computers here.

APPLICATION: FRONTIERS OF QUANTUM COMPUTING

So where does this bring us? To Google: It’s not just the king of your new tab page—as of 2019, it’s also quantum royalty. In 2019, they claimed to achieve a feat called quantum supremacy, when their quantum computer Sycamore performed a calculation that would have taken thousands of years for classical computers—although the actual validity of this claim is still under active investigation [10].

Another major player in the quantum computing scene is IBM, who made 14 quantum computers accessible via cloud for developers [11]. But we’re still in the fledgling years of quantum computing—both the largest of IBM's quantum computers, and Google's Sycamore, have “just” 53 qubits compared to the trillions of bits that are in the device you’re reading this with [12]. This is because there are many physical obstacles to creating quantum computers, such as the delicate environment that needs to be created to store and manipulate qubits.

extension: encryption

Powerful quantum computers are probably not Godot—it looks like they’ll get here eventually. If they do, here’s a potential second-order effect: Password decryption.

Encryption is based on functions that are easy to compute one way but difficult to reverse, like multiplying two prime numbers together, which is relatively easy to do but immensely time-consuming to undo [13]. Encryption algorithms were never unbreakable; they were just too time-consuming for classical computers to breach. But this wouldn’t be true for quantum computers, which as we’ve seen in Sycamore, can solve quickly the problems that would take thousands of years for classical computers.

Should we be worried? Yeah, maybe. But maybe quantum-resistant encryption schemes exist [14]. More here.

references

1: https://iopscience.iop.org/article/10.1088/2058-7058/15/9/22

2: https://quantumatlas.umd.edu/entry/superposition

3: https://quantumatlas.umd.edu/entry/entanglement

4: https://www.ncbi.nlm.nih.gov/books/NBK538701/

5: https://quantumatlas.umd.edu/entry/qubits

6: https://www.nature.com/articles/543S2a

7: https://www.quantamagazine.org/how-to-turn-a-quantum-computer-into-the-ultimate-randomness-generator-20190619/

8: https://docs.microsoft.com/en-us/quantum/concepts/dirac-notation

9: https://qiskit.org/textbook/ch-states/single-qubit-gates.html

10: https://www.sciencenews.org/article/google-quantum-supremacy-claim-controversy-top-science-stories-2019

11: Ibid.

12: Ibid.

13: https://www.technologyreview.com/2019/05/30/65724/how-a-quantum-computer-could-break-2048-bit-rsa-encryption-in-8-hours/

14: https://www.tandfonline.com/doi/full/10.1080/23742917.2016.1226650